Hello everyone,

I’m working on a simple explanatory Jupyter Notebook for RAG that demonstrates using Weaviate as a vector database. My setup uses Embedded Weaviate with a straightforward connection. Here’s the current setup:

```python
import weaviate

# Embedded Weaviate that vectorizes through a self-hosted transformers inference API
client = weaviate.connect_to_embedded(
    persistence_data_path="some_path",
    environment_variables={
        "ENABLE_API_BASED_MODULES": "true",
        "ENABLE_MODULES": "text2vec-transformers",
        "TRANSFORMERS_INFERENCE_API": "http://127.0.0.1:5000/",
    },
)
```

I have set up my own transformers inference API as a Flask app with a `/vectors` endpoint, and it works well for embedding models. Now I’m exploring Weaviate’s generative features, starting with a simple example like this one:
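For reference, the contract such a `/vectors` endpoint has to satisfy can be sketched with the standard library alone. This is a minimal sketch, assuming the request and response shapes used by the reference `t2v-transformers` inference container (request `{"text": ..., "config": ...}`, response with `text`, `vector` and `dim`) and the readiness and metadata paths it exposes. `toy_embed`, `build_vectors_response` and `VectorsHandler` are hypothetical names, and `toy_embed` only stands in for a real embedding model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable, List


def toy_embed(text: str) -> List[float]:
    """Hypothetical stand-in for a real embedding model (toy features only)."""
    vowels = sum(ch in "aeiou" for ch in text.lower())
    return [float(len(text)), float(vowels), 1.0]


def build_vectors_response(body: dict, embed: Callable[[str], List[float]]) -> dict:
    """Build the JSON returned by POST /vectors.

    Assumed shape (as in the reference t2v-transformers container):
    request {"text": "...", "config": {...}} -> {"text", "vector", "dim"}.
    """
    text = body["text"]
    vector = embed(text)
    return {"text": text, "vector": vector, "dim": len(vector)}


class VectorsHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Assumed probe paths that Weaviate checks before sending requests.
        if self.path in ("/.well-known/ready", "/.well-known/live"):
            self._send(204, None)
        elif self.path == "/meta":
            self._send(200, {"model": "toy-embedder"})
        else:
            self._send(404, {"error": "not found"})

    def do_POST(self):
        if self.path != "/vectors":
            self._send(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        body = json.loads(self.rfile.read(length) or b"{}")
        if "text" not in body:
            self._send(400, {"error": "missing 'text'"})
            return
        self._send(200, build_vectors_response(body, toy_embed))

    def _send(self, status, payload):
        # Write a status line plus an optional JSON body.
        self.send_response(status)
        if payload is None:
            self.end_headers()
            return
        data = json.dumps(payload).encode()
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


if __name__ == "__main__":
    # Same address as TRANSFORMERS_INFERENCE_API in the connection above.
    HTTPServer(("127.0.0.1", 5000), VectorsHandler).serve_forever()
```

Keeping the response construction in a plain function makes it easy to swap the toy embedder for a real model without touching the HTTP layer.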

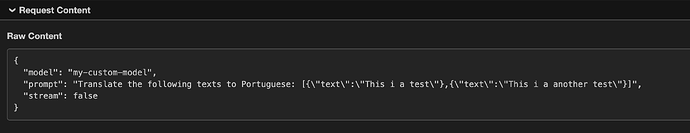

However, our current LLM provider is together.ai, which exposes OpenAI-compatible endpoints and is accessed through a proxy so that the API key stays hidden from users running the notebook. Unfortunately, Weaviate’s OpenAI integration only accepts specific model names such as `gpt-4o`. If I use another model name (`meta-llama/Llama-3.2-3B-Instruct-Turbo`), it results in an error.


Does anyone know whether these generative tasks are possible with our setup? I was considering an approach similar to the one I used for the transformers embeddings: add a route to my Flask app that mimics the OpenAI API but, behind the scenes, calls the together.ai endpoints through our proxy. However, I haven’t found any Weaviate documentation that supports such a custom configuration. Running these generative models locally is not an option due to resource constraints.
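The shim idea can be sketched as a small OpenAI-compatible forwarder. This is a minimal sketch, assuming Weaviate’s OpenAI generative module sends standard chat-completions requests to `<base_url>/v1/chat/completions` and that the proxy injects the together.ai key itself. `TOGETHER_PROXY_URL`, `PROXY_URL`, `MODEL_MAP`, port `5001` and all function and class names are hypothetical. The key step is rewriting the model name Weaviate accepts (`gpt-4o`) to the together.ai model before forwarding; the same logic could live in a route of the existing Flask app instead of a separate stdlib server.

```python
import json
import os
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical: internal proxy that forwards to together.ai's OpenAI-compatible
# API and adds the real API key server-side.
PROXY_URL = os.environ.get(
    "TOGETHER_PROXY_URL", "http://127.0.0.1:8080/v1/chat/completions"
)

# Model name Weaviate is willing to send -> model actually served by together.ai.
MODEL_MAP = {"gpt-4o": "meta-llama/Llama-3.2-3B-Instruct-Turbo"}


def rewrite_payload(payload: dict, model_map: dict) -> dict:
    """Return a copy of a chat-completions payload with the model name swapped."""
    rewritten = dict(payload)
    model = payload.get("model")
    rewritten["model"] = model_map.get(model, model)
    return rewritten


def forward(payload: dict) -> bytes:
    """POST the payload to the proxy and return the raw JSON response body."""
    req = urllib.request.Request(
        PROXY_URL,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return resp.read()


class ChatShim(BaseHTTPRequestHandler):
    def do_POST(self):
        # Assumed path used by Weaviate's OpenAI generative module.
        if self.path != "/v1/chat/completions":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        # The incoming Authorization header is not forwarded: the proxy adds the key.
        try:
            status, body = 200, forward(rewrite_payload(payload, MODEL_MAP))
        except urllib.error.HTTPError as err:
            # Relay the upstream error status and body unchanged.
            status, body = err.code, err.read()
        except urllib.error.URLError as err:
            # Proxy unreachable: report a bad-gateway error in JSON.
            status, body = 502, json.dumps({"error": str(err.reason)}).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


if __name__ == "__main__":
    HTTPServer(("127.0.0.1", 5001), ChatShim).serve_forever()
```

For Weaviate to use this, the collection’s generative configuration would have to point at the shim, for example through a base URL option of the OpenAI generative integration, if your Weaviate and client versions expose one. Weaviate may still require some OpenAI API key to be set; since the shim does not forward it, a placeholder value should be enough.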

Thank you all in advance.

Lucas